Oh my. Here we go again with all the deathiness. Movie criticism keeps dying deader and deader. Film itself has keeled over and given up the ghost. Cinema ist kaput, and at the end of last month “movie culture” was pronounced almost as deceased as John Cleese’s parrot. Ex-parrot, I mean. Then the movie “Looper” came out, posing questions like: “What if you could go back in time? Would you kill cinema?” Or something like that.

People, this dying has gotta stop.

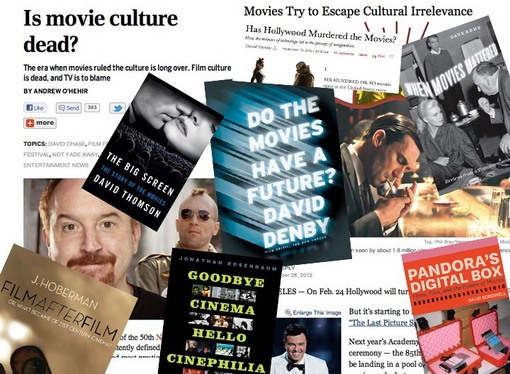

At Salon.com, Andrew O’Hehir asks “Is movie culture dead?” He concedes that he’s “overstating the case a little for dramatic effect,” but, he adds, “just a little.” His thesis is that cable television (from “The Sopranos” and “The Wire” to “Mad Men,” “Breaking Bad,” “Louie,” “Homeland,” “Game of Thrones,” “Justified,” “Damages,” “Sons of Anarchy”…) has eclipsed movies in the “national conversation” about style and substance in popular culture:

Film culture, at least in the sense people once used that phrase, is dead or dying. Back in what we might call the Susan Sontag era, discussion and debate about movies was often perceived as the icy-cool cutting edge of American intellectual life. Today it’s a moribund and desiccated leftover that’s been cut off from ordinary life, from the mainstream of pop culture and even from what remains of highbrow or intellectual culture. While this becomes most obvious when discussing an overtly elitist phenomenon like the NYFF, it’s also true on a bigger scale. Here are the last four best-picture winners at the Oscars: “The Artist,” “The King's Speech,” “The Hurt Locker” and “Slumdog Millionaire.” How much time have you spent, cumulatively, talking about those movies with your friends?

Well, he may have a point with those last two sentences — though I think it says more about the diminished stature of the Oscars than about the state of Sontagian “film culture.” This, however, is hardly new(s). Multiplexes and home video technology altered everything back in the 1980s, and have continued to do so ever since. Movies simply don’t stay in theaters long enough to make an impression on popular culture the way they once did, when a hit (mainstream or art-house) could play for months, allowing people to live with it, think about it, talk about it.

Now even the hits disappear in a few weeks, then appear in consumer video formats. People can watch them privately, but they are no longer “events.” Although “tent-pole” blockbusters are still sought (on the production side as well as the consumer side), the crowded supply chain ensures that very little has much impact. The movies may indeed have changed, but our relationship to them has been radically transformed since the 1960s.

That doesn’t bother me so much. Yes, mass culture is declining and we are becoming increasingly “niche-ified.” Does it really matter so much which format has more cultural currency at any given point in time — big-screen projections in large auditoria or HD presentations in homes? So what if television formats have eclipsed feature filmmaking in the popular imagination at this particular moment in history? Isn’t that just what happens as times and tastes change, art forms evolve, technologies mutate? Maybe the old categories of “film” and “television” are no longer so distinct. Maybe they’re blending together into new forms that aren’t differentiated by shape, size and length — two-hour continuous blocks versus one-hour installments spread out at regular intervals over weeks and months. Now we can watch movies and TV series as if we were reading books — all at once or in shorter sittings, and we determine when and how and for how long we want to do it.

A New York Times story headlined “Movies Try to Escape Cultural Irrelevance (Hollywood Seeks to Slow Cultural Shift to TV)” reports that the diminished stature of movies in the popular imagination has not gone unnoticed, not just by media and arts critics but in showbiz circles:

Several industry groups, including the Academy of Motion Picture Arts and Sciences, which awards the Oscars, and the nonprofit American Film Institute, which supports cinema, are privately brainstorming about starting public campaigns to convince people that movies still matter. […]

… [The] prospect that a film will embed itself into the cultural and historical consciousness of the American public in the way of “Gone With the Wind” or the “Godfather” series seems greatly diminished in an era when content is consumed in thinner slices, and the films that play broadly often lack depth.

The story quotes an anecdote from a Colorado State University student, who says that “the Academy helped break the connection between her generation and high-end movies in 2011 when it chose as Best Picture ‘The King’s Speech,’ which looked backward, rather than ‘The Social Network,’ which pushed ahead.” And while that’s a neat formulation, the vote tallies of the Academy don’t have much at all to do with cultural relevance, and probably haven’t at least since “Gandhi” beat “E.T. The Extra-Terrestrial” for best picture in 1982. (You may choose your own jump-the-shark Oscar moment.) And the movies’ subjects and settings (both “King’s Speech” and “The Social Network” are historical, but one is about the use of radio by an obsolete monarchy and the other is about the use of newer technology by college students) don’t have much to do with anything. Stylistically, both are fairly classical films. It’s just that there’s a world of difference between the artistic vision and skill of Tom Hooper and those of David Fincher.

O’Hehir regrets that the larger art film audience that helped create “the Mt. Rushmore Great Men of postwar art cinema – Bergman, Truffaut, Fellini, Kurosawa” (and, later, such post-Vietnam-era art-house titans as Malick, Herzog, Wenders, Lynch, Jarmusch, Kiarostami) has largely disappeared from the public arenas (restaurants, bars and coffee houses) and, as everybody keeps saying again and again, has migrated onto sites, blogs and forums like this one, as well as onto Facebook and Twitter. The most dramatic differences are geographical (you don’t have to live in an urban intellectual milieu to participate in serious discussions) and physical (while discussions may be public in that they’re conducted via “social media,” they aren’t in-person).

Nostalgia for old styles, forms, technologies, conventions, traditions is understandable, inevitable and valuable (to understand where we are and where we’re going, it’s crucial that we understand where we’ve been). But change is persistent and it would be fruitless to hang onto the “old ways” even if we could. Yes, movie culture isn’t what it used to be. It never was. I wouldn’t trade my education in film — horrible 16mm prints in classrooms and film societies, beat-up 35mm prints in revival houses — for anything. I took busses and walked miles to see a movie because I didn’t know when I’d get another chance, and movies meant all the more to me because of that imperative. And yet… now we have thousands of movies (mostly in gorgeous, pristine digital “prints”) available with a few clicks of the remote. And on an HD screen not much smaller than the ones at some of the art houses or campus screenings I’ve attended.

Recently, for reasons I probably don’t quite understand, I’ve felt a compulsion to see a bunch of gritty crime and espionage thrillers from the ’60s and ’70s (“The Ipcress File,” “The Laughing Policeman,” “Anderson Tapes,” “The Quiller Memorandum”) and, in my state of reverie, I thought: “Oh, it’s too bad that I’ll never again be able to revisit that time, go back and experience the feel of that period.” Well… yeah. It was as if I’d just become aware of that reality, and was experiencing the ache of that loss for the first time. You see, for a moment there I guess I thought I could, but I can’t. I regret the inaccessibility of those sensations, anyway — my younger, fresher, sharper senses and perspectives, as well as the everyday textures of the times and places themselves.

Like you, over the last few months I’ve read I don’t know how many articles and (at least sections of) books that in one form or another concern “the death of cinema,” or at least the fading of once-standard notions of what the cinema meant. Most of the books (which include compilations of reviews) are written by critics: Dave Kehr (When Movies Mattered: Reviews from a Transformative Decade), Jonathan Rosenbaum (Goodbye Cinema, Hello Cinephilia: Film Culture in Transition), J. Hoberman (Film After Film: Or, What Became of 21st Century Cinema?), David Denby (Do the Movies Have a Future?), David Bordwell (Pandora’s Digital Box: Films, Files, and the Future of Movies)… Look at those titles. You may get the impression that something is going on around these parts.

But let’s not panic. We’ve seen this kind of thing before. Technology has always shaped the dominant forms of popular culture. The very idea that music, pictures, performances of any kind could be created, recorded and reproduced ad infinitum is still quite new. Rock music was an AM radio phenomenon in the ’50s and ’60s (top 40 hits on dashboard and transistor radios), based on sales of 7-inch, 45 rpm singles before it became FM’s album-oriented rock, devoted to 12-inch, 33 1/3 rpm LPs. Singles had an “A” side and a “B” side: the featured tune, backed with another song (sometimes not on any album) that, occasionally, became equally (or more) popular. Albums were sequenced to be played one side at a time, but CDs were programmed for continuous play (though it was also easier to program them to play tracks in any order). MP3s and other digital, downloadable formats have put the emphasis back on single tracks again. (We won’t even get into music videos, a once-dominant promotional format that now attracts more attention as a DIY YouTube phenomenon, and that otherwise seems like a quaint relic from another era.)

Now, TV and movies are available not only on discs (a format whose days are numbered) but as digital downloads and streams on cable and satellite On Demand services, Netflix, Amazon Instant, iTunes, Vudu, Fandor, MUBI… You can watch entire seasons of shows in sequence. And the availability of non-broadcast programming (HBO, Showtime, FX, AMC…) coupled with the advent of large-screen HDTVs has resulted in television that’s fully cinematic in direction, photography and production values. Feature-length films may engage in long-form storytelling across several sequels/prequels, one chunk at a time (released a year or more apart), but television allows for extended narrative development, exploration and experimentation in shorter installments spread out at even greater length, with intricacy and sophistication.

Part of this has to do with the control we can now exercise over a medium that used to simply happen before our eyes. And the attention to detail that comes with it, as we are able to repeat, pause, rewind our disc players and DVRs — and then discuss what we’ve seen/discovered online. I think of “Twin Peaks” as being the first “Internet” show, its birth roughly coinciding with the creation of the early World Wide Web.

Even back in the early 1990s, VCRs and Usenet discussion groups were essential components of the show, as owl-eyed watchers collected clues (many tracing back to Otto Preminger’s 1944 “Laura”) and worked out theories about Who Killed Laura Palmer. I still think “Twin Peaks” is the crowning achievement of David Lynch’s film career, even though others directed many episodes, including names that are now familiar from “The Sopranos,” “Deadwood,” “Mad Men,” “Breaking Bad,” “Sons of Anarchy,” etc. In his 2012 book The Big Screen, critic David Thomson cited “someone who has a case for being the most effective director of his time”:

He was born in 1959 and his name is Tim Van Patten. There is a fair chance you have not heard of him, a reminder that, with television, you are not always watching, even if the set is on and your gaze is more or less given to the screen. Van Patten has never made a theatrical movie, but he has directed some of the best material of our time, twenty episodes of “The Sopranos,” more than anyone else. That’s about sixteen hours of film (let’s say eight feature films) in the years from 1999 to 2007. There are few movie directors who worked that steadily in those years for the big screen, and Van Patten was doing other work, too, as a director for hire (episodes of “Deadwood,” “The Wire,” and “Sex and the City,” among others).

Television may still be more of a writer-creator-showrunner’s medium (David Chase was unquestionably the auteur of “The Sopranos,” as David Simon is of “The Wire,” Matthew Weiner of “Mad Men,” Vince Gilligan of “Breaking Bad,” Louis CK of “Louie,” and so on), but it wasn’t long before I perked up upon seeing Tim Van Patten’s name at the beginning of a “Sopranos” episode (or, later, other HBO series, from “The Wire” to “Game of Thrones”).

Thomson is wrong in his assumption that television is still a passively absorbed medium, however. I’m sure there are still people who leave the “boob tube” on all the time as background noise for company (and to drown out “the voices”), and people who “watch TV” without much caring what’s on. (Bruce Springsteen was either being conservative or naïve in 1992 when he sang “57 Channels (and Nothin’ On).”) But there are (or were) people who just liked to “go to the movies,” too, and whatever was playing didn’t matter so much. With DVRs, DVDs, Netflix and other technologies now, cinema (in some form) can happen just about any time and anywhere.

I’ve written a lot about the changes wrought by home video, which changed our conception of film and television from experiences in space and time (they could only be seen at a scheduled hour in a particular place) to physical objects (the cassette, the disc). Now we’ll conceive of them as files, sitting on servers somewhere in “the cloud” and summoned to our devices (HDTVs, iPads, laptops, smartphones) via remote.

But these devices, in concert with digital communications previously undreamed of, also allow us to study and immerse ourselves in “audio-visual programming” like never before. In one section of a witty and observant blog dedicated to the fashions of “Mad Men” and how they are used to further the story and develop characters, proprietors Tom & Lorenzo notice something I probably would never have picked up on in “The Other Woman” (S05E11) when Joan keeps her assignation with the Jaguar executive:

And there it is: the outfit that caused us to gasp out loud. That is, of course, the fur that Roger gave her back in 1954; the one that caused her to coo “When I wear it, I’ll always remember the night I got it.” Well, fuck you, Roger Sterling. That’s EXACTLY what this outfit is saying. “You ruined what we had by letting me do this, so I’m ruining what you gave me.” We’d be surprised if she ever wore it again. It’s one of those beautiful costuming moments that takes a sad, horrifying scene and makes it even more so once you realize what she’s wearing.

This scene mimics the scene in “Waldorf Stories” [S04E06] when she got the mink. She’s in a hotel room, in a tight black dress showing a lot of cleavage, wearing a mink and accepting a gift from a man…

Long-form stories make it possible to get into depths and details of characters and their history. Many shows are plotted out a full season at a time, so that each character and storyline has a meaningful shape to it, rather than the episodes just being one-offs as in the old days of network shows when it was rare to introduce a storyline that extended into a second or third installment. In the final episode of Season 3 of “Louie” a character played by Parker Posey in two earlier episodes mysteriously reappears in a most disturbing context. There’s no explanation, no attempt to re-introduce her. It’s expected that you will remember her, know what she means to Louie, and have feelings about her yourself.

In the mid-season finale of the fifth and final season of “Breaking Bad” (the last of eight episodes, to be followed by eight more in 2013), there’s a deeply unsettling scene on the patio of Walter White’s home, out by the pool. The location has a lot of resonance in the show because of story- and character-defining events that have taken place there, and there’s good reason to feel apprehensive about what could happen on this otherwise ordinary occasion. The longer it goes on, the more the tension builds. When the payoff does arrive, it comes from out of the blue. And it begins by reprising several shots from the opening of the first episode of the second season (“Seven Thirty-Seven”), an ominous collection of strange, almost surreal images (a dripping garden hose, a wind chime, a bug) that weren’t put into context until a sudden catastrophic event in the final episode of that season.

How many movies are put together with that kind of detail? No wonder people would rather talk about good TV. Some of the best series have made imaginative use of memory, dreams and other altered states of consciousness. It’s a long way from the rude awakening gimmick of ’80s soaper “Dallas” to the haunting Bergmanesque dreams and visions of Tony Soprano.

“Louie” slips into dreamlike passages that aren’t necessarily called out as dreams. They’re just part of the texture of the show, reflecting his fears and desires, as when he steals an ice cream cone from a kid and then jumps into a nearby helicopter to escape; or finds himself lost in New Jersey, a long, dark nightmare out of “Eyes Wide Shut” or “After Hours.” And, as in the enigmatic search for the Yangtze River in the finale of the third season, the results can feel more like Apichatpong Weerasethakul or Jia Zhangke. (There’s even a wonderful Three Gorges Dam sight gag that goes unexplained.)

O’Hehir writes: “… It’s just that there’s no point in pretending that movies play the same dominant role in our culture that they once did or that art-house movies of the sort the [New York Film Festival] so lovingly curates have any impact at all on the American cultural mainstream.” Instead, he says, 2012 movies like “Moonrise Kingdom” or “Beasts of the Southern Wild” “briefly get hyped by people like me and then sink without a trace.” True, but again, that’s not new(s). It always takes these things a while — months, years — before the influence of outlier culture seeps into the mainstream.

Talismanic styles and customs created by a countercultural (what we used to call “anti-establishment”) elite on the fringes of society (and that includes low culture as well as high) are eventually, inevitably assimilated into the larger orthodoxy. Um, by which I mean: Cool, subversive, cutting-edge works, and the movements associated with them, have always been co-opted and absorbed into consumer culture. Look what happened to the Beats, to long hair, to Flower Power, to pop art, to punk, to hip-hop, to drag…

That’s the way it works: any one-time “outsider” element — the rebellious, the progressive, the subversive, the avant garde — is simply subsumed and repackaged as a salable commodity. It’s the story of our times, in which the dominant social and political paradigm has become “the marketplace.” At Microsoft (the first place I heard this particular term) this phenomenon was known as “embrace, extend and extinguish.” Wikipedia summarizes it this way:

1) Embrace: Development of software substantially compatible with a competing product, or implementing a public standard.

2) Extend: Addition and promotion of features not supported by the competing product or part of the standard, creating interoperability problems for customers who try to use the ‘simple’ standard.

3) Extinguish: When extensions become a de facto standard because of their dominant market share, they marginalize competitors that do not or cannot support the new extensions.

“Easy Rider” made Hollywood backlot shooting seem obsolete almost overnight, extending the influence of the French New Wave in taking handheld cameras into the streets. Richard T. Jameson wrote a piece about this earlier changing of the guard, circa 1970, when he saw Billy Wilder’s “The Private Life of Sherlock Holmes” with seven other people in an engagement that only lasted one week (while, across the street, “Love Story” was packing ’em in):

[The] film’s complete failure in 1970 was, in several respects, definitive of that moment in film history.

For one thing, “Holmes” was just the sort of sumptuously appointed, nostalgically couched superproduction that once would have seemed tailor-made to rule the holiday season. Only two Christmases before, Carol Reed’s “Oliver!” had scored a substantial hit, and gone on to win Academy Awards for itself and its director (a “fallen idol” two decades past his prime). Yet in 1969-70, the mid-Sixties vogue for three- and four-hour roadshows – reserved-seat special attractions with souvenir programmes and intermissions – abruptly bottomed out. Indeed, after witnessing such box-office debacles (and lousy movies) as “Star” and “Paint Your Wagon,” United Artists demanded that Wilder shorten his film by nearly an hour before they would release it at all.

But for the buying public, length wasn’t the issue. This was a new era, defined by the “youth culture” and Vietnam War protests, by the X-rated urban fable “Midnight Cowboy” (John Schlesinger, 1969), and by the political-picaresque “Easy Rider” (Dennis Hopper, 1969) – especially the latter, a road movie made entirely on the road, a triumph of that ministudio-on-wheels, the Cinemobile. In such a climate, the U.S. audience couldn’t have been less interested in a passionately romantic meditation on a 19th-century icon realized principally on exquisitely dressed sets of 221B Baker Street, the Diogenes Club, and a Scottish castle with a mechanical monster in the cellarage. (A year earlier, Italian maestro Sergio Leone had found U.S. audiences similarly inhospitable to his equally passionate, equally romantic meditation on the 19th-century West and also on classical Hollywood filmmaking, “Once Upon a Time in the West,” even if its politics were at least as contemporary as those of “Easy Rider.” Like Holmes, “Once Upon a Time in the West” would also eventually be embraced as a masterpiece.)

Bob Dylan was only part right when he wrote, in a song performed by Roger McGuinn on the “Easy Rider” soundtrack, that “he not busy being born is busy dying.” It’s never just one or the other; both are always underway simultaneously. Nostalgia for the way things used to be is fine — even important, if we’re to value the achievements that got us where we are today. And progress is always accompanied by loss. We shouldn’t lose sight of the truth — that there’s plenty of room in the cinema (if not in the cinemas) for the old and the new, “Easy Rider” and “The Private Life of Sherlock Holmes,” “Mad Men” and “Once Upon a Time in Anatolia,” “The Avengers” and “Justified,” “Louie” and “Uncle Boonmee Who Can Recall His Past Lives.”

My feeling is: Let’s see where the new formats, technologies and possibilities take us. We’ll always miss the forms of the past, from silent movies to TV variety shows. But other things rise in prominence and relevance, only to themselves be supplanted, either by newer, unforeseen forms, or by old ones cycling back around. The Western was considered “dead” for a good long time, commercially unviable. It’s no longer the ubiquitous genre it once was, in film and television, but since “Unforgiven” it’s become just another kind of story (“Brokeback Mountain,” “3:10 to Yuma,” “The Assassination of Jesse James by the Coward Robert Ford,” “Broken Trail,” “The Three Burials of Melquiades Estrada,” “No Country for Old Men”).

With each new paradigm we lose and we gain. It’s still the same old story. And it’s not the end of the cinema.