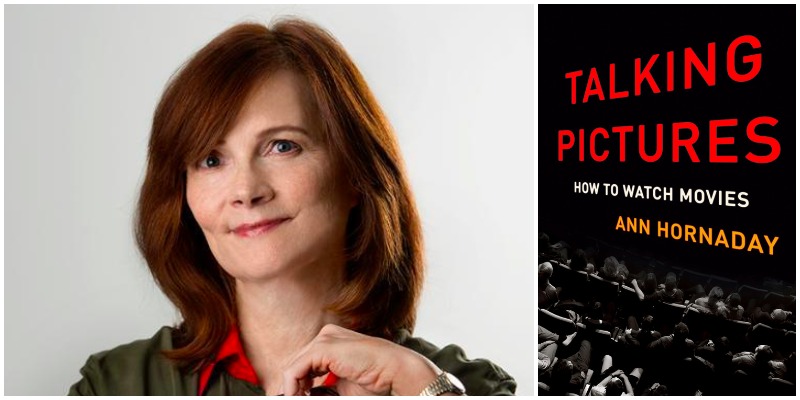

In her captivating new book, Talking Pictures, Washington Post movie critic Ann Hornaday says that a movie will teach you how to watch it. She explains how the thousands of small and large choices made by the writers, actors, cinematographers, production designers, composers, editors, and directors create the ultimate immersive creative experience. And, indirectly, she ends up bringing critics into the discussion as well. “The job of the critic,” she writes, “is to recognize these connections and disruptions, not in order to be pedantic or superior to the reader, but to open up interpretive possibilities that will enrich the viewing experience or at least provide some thought-provoking reading.” (Talking Pictures will be released on June 13.)

The book is filled with illuminating insights from Hornaday’s interviews with filmmakers and from her own perceptive response to the movies she has seen and written about. In an interview with RogerEbert.com, she discussed some of the topics in the book, from her problems with 3D to the issue of spoilers.

When a movie is based on a book, should you read the book before seeing the film?

Ah, start with an easy one, why don’t you?! This is a perennial conundrum for critics. My answer is “No,” if only because so many movies today are based on books—and graphic novels, comics, video games and other pre-existing properties—that it’s impossible to experience every story in its original form before it becomes a film. But I also think it’s important philosophically to judge a film on its own merits, as a freestanding piece of cinema that works or doesn’t work according to its own aesthetic and narrative principles.

In the case of something like “Harry Potter” or “The Lord of the Rings,” if the critic doesn’t have time to read those books, he or she should at least seek out the opinions of hardcore experts who have seen the movie, to gauge its success in fan service. I don’t think that group should dictate whether something succeeds or fails, but their expectations deserve at least to be taken into account.

Why are you so critical of 3D? You discuss the benefits of technological advances like the 5.1 sound system—what other technological advances do you think have been used most effectively in movies?

With very few exceptions, I really have come to despise 3D. I think it rarely adds anything to the image, and it often detracts, in terms of brightness, detail, color and legibility. I thought it was put to good use in “Hugo” and “Cave of Forgotten Dreams,” but now 3D is being used simply for depth of field, which Gregg Toland perfected with the good old-fashioned movie camera. I just think 3D is more of a gimmick and cash grab than a genuine aesthetic advance. As for other technological feats, I’ve become more and more impressed with the combination of CGI and live action, which began in the “uncanny valley” and has now given us such ravishing, immersive experiences as the newest “Jungle Book” and “Doctor Strange.” The romantic in me still loves practical effects (stay strong, Christopher Nolan), but CGI has really grown by leaps and bounds over the past few years.

How do you decide whether a detail is essential to a review or too much of a spoiler to include? What about a more general survey or analysis written months after a film’s release—does the calculus on spoilers change?

One of the best lessons I learned as a newly minted critic was when Elizabeth Peters, who was running Rick Linklater’s Austin Film Society, called me at the Austin American-Statesman and very gently and diplomatically took me to task for synopsizing too much of the plot in one of my reviews. It wasn’t just about spoilers; it was the idea that, if someone should decide to go to that movie, they deserved to enjoy the same sense of discovery that I did as a first-time viewer. I realized in that moment that synopsis is the final refuge of the lazy critic. I try to avoid it at all costs, and instead convey the tone, mood and general gestalt of a movie, the better to prepare readers for the experience rather than have it for them. Of course, one or two revelatory details can be helpful in that regard. I try to put myself in the viewer’s place and ask whether my delight in a detail would have been diminished by knowing about it beforehand; I also consider whether it’s been revealed in trailers. And I consult with my editors and fellow reviewers, who, I’m happy to say, take the issue as seriously as I do. Even months after release, I try to remain spoiler-free, especially if I’m doing something for a wide, general audience. Why ruin something unnecessarily?

How has the widespread availability of films from every era changed the way today’s audiences watch movies?

I wish I knew. I’d like to say that it’s made us all more cine-literate, but as we saw in a recent Twitter meme, a game show contestant couldn’t identify “A Streetcar Named Desire” with only one letter missing from the title.

Why is it that you can always immediately tell the difference between a movie made in the past and a new movie set in the past?

I guess off the top I’d say the means of production have changed, as have their aesthetic artifacts. This gets into a notoriously nerdy debate among film fans about grain—that visible texture we see on old black and white films—and whether it’s a bug or a feature. I’m one of those sentimentalists who recoils when a 1940s film is “cleaned up” and made to look more modern, even though the less grainy version is probably what the filmmaker would have intended at the time. Similarly, our ideas of what the past properly “looks” like have been conditioned by films like “Days of Heaven,” “McCabe & Mrs. Miller” and “Lincoln.” One of the reasons why Michael Mann’s “Public Enemies” didn’t work—for me—was that the high-def digital “live TV” look of the film felt completely at odds with the Depression-era period. But Mann’s aim was precisely to make the past more immediate. So I guess it comes down to taste.

To your question: Even something like Soderbergh’s “The Good German,” which sought to re-create World War II-era movies in terms of grain structure and aspect ratio, still managed to look obviously modern, not least because of the recognizable presence of George Clooney. Still an enlightening exercise, though. I guess an economist would say the answer is “over-determined.”

How does our familiarity with particular performers affect the way we watch their films, for better and/or worse?

This is an enduringly fascinating question for me. How much can, or should, we banish what we know of actors off-screen? That’s an exponentially more difficult enterprise with our 24/7 “Stars, they’re just like us!” gossip culture. I think good directors are aware of those associations, accept them and leverage them to help the characterization on screen. (Good example: Angelina Jolie in “Maleficent”; bad example: Angelina Jolie in “Changeling.”)

This is also where good screenwriting comes in: With a really specific and well-developed screenplay, the actor has the tools he or she needs to build a fully realized character and merge with it, even if they don’t disappear entirely. So, yes, that’s Casey Affleck and Michelle Williams up there in “Manchester by the Sea”; we recognize them from other movies and red carpet appearances. But their characters are so grounded and filled out that they genuinely seem to become someone else while still being themselves. It’s a type of cognitive dissonance that’s difficult to describe!

Should people read reviews before or after seeing the film?

Both! Please visit WashingtonPost.com and RogerEbert.com early and often!

How have digital and social media changed the way audiences decide what to see and how to think about it?

This is probably above my pay grade. But I note with interest the Motion Picture Association of America’s recent theatrical statistics report indicating that moviegoing among young people is up, suggesting that review aggregators like Rotten Tomatoes and Metacritic, as well as social media, are helping to drive business, especially to genre films with strong communities of affiliation. As we’ve discovered at the Post with “Get Out,” technology is also helping to foster a continuing conversation about films long after they’ve opened.

Do awards like the Oscars or Golden Globes help bring the attention of the audience to quality films or do they create a skewed notion of “prestige”?

I think for the most part they’ve been a force for good in providing “earned awareness” for movies that otherwise couldn’t afford huge marketing campaigns, and that studios otherwise wouldn’t bother with. We’ll always have what our colleagues call “Oscar bait” movies—genteel historical dramas, usually featuring well-heeled British actors in a worthy but not necessarily daring or cinematically expressive story. But I’ve come to see those films through the eyes of readers who value them for what they are. My current obsession is figuring out a way for the Academy and the studios to spread these films out across all 12 months of the year instead of bunching them into awards season, which creates a logjam, is hard on filmgoers and inevitably results in some good movies being unfairly crowded out (I would include such gems as “Loving” and “20th Century Women” in that category from last year).

The highlights of the book include some exceptionally insightful comments from your interviews with filmmakers. Whose answers surprised you the most and what has speaking to filmmakers taught you about how to watch a movie?

I really learned how to be a critic on the job, as a freelance reporter in New York and, later, writing features as a critic. I didn’t go to film school and only took classes in film history and filmmaking late in life, during an arts journalism fellowship. So writers, directors, actors and below-the-line artisans have been enormously helpful in guiding my eye and my thinking about movies. I’m not sure anyone has surprised me that much … Although Nicolas Winding Refn has always been refreshingly candid (he’s the one who told me that, in the digital era, “the color timer is the new gaffer”). Interviewing filmmakers about how they go about doing their jobs has given me great respect for film as a collaborative medium. I still think it’s mostly a director’s medium, but I see the director’s job as leading an entire creative team, each member of which makes discrete, make-or-break creative contributions to the finished whole.

How do you as a critic maintain a balance between allowing yourself to get caught up in a movie and analyzing the way it achieves (or does not achieve) its story-telling goals?

That’s the mental yoga of film reviewing! I really do try to let myself succumb to every film I see, because I think that’s only fair. But I do take a notebook along with me, so I can jot down what seems to be working or not at any given moment. Of course, that’s harder when something is really sweeping you along; but then I’ll try to kind of snap out of my trance for very brief interludes, so I can take a step back and be able to explain how the filmmakers are casting their particular spell. This is why I tend not to hang out and chat with my fellow critics after a screening; I use that time to let a film sink in, think about it, go over it in my mind and basically internalize it. I remember not understanding “Magnolia” at all immediately after seeing it, but by the time I’d walked back to the Baltimore Sun newsroom, I’d fallen in love with it.

Should Hollywood stop producing so many remakes and sequels?

The glib answer is: “Yes! Next question!” But look at how well Disney has done with remakes and sequels. They understand, perhaps better than any studio right now, that all movies—even comic-book movies or sure-fire “Star Wars” reboots—are execution dependent. They all need to be brilliantly written, perfectly cast and executed with skill and a lack of condescension toward the audience. So I’d say Hollywood needs to stop producing so many lazy remakes and sequels.

What’s the difference between an actor and a movie star? Who qualifies as both?

I guess what we’re talking about is a character actor—the actor whose name might not be a household word, and who disappears into whatever person they’re playing—versus an actor whose persona extends beyond the screen to what we know of them as larger-than-life public/private figures. I honestly think most of our movie stars are good actors (I’m thinking of Angelina Jolie, Johnny Depp, Brad Pitt), but the trick is finding those roles that mesh seamlessly with audience expectations. I actually think Tom Cruise is an excellent actor (op. cit. “Magnolia”), especially when he’s addressing his own star persona, which he did so cleverly in the criminally under-seen “Edge of Tomorrow.” I’d also put Denzel Washington in that category of stars-who-can-also-act. He’s carved out a wonderful career of being able to open a movie purely on the basis of his presence, but also delivering specific, technically nuanced performances.
